Your Greatest AI Risk?

Compliance Theatre

Fines are coming. Meet the toughest AI regulations head-on with hard evidence. Patent-pending, always-on assurance technology, built for GRC teams.

Evolving regulations. Technical complexity. Constant flux. Multiple stakeholders.

AI & Algorithmic testing is no easy job.

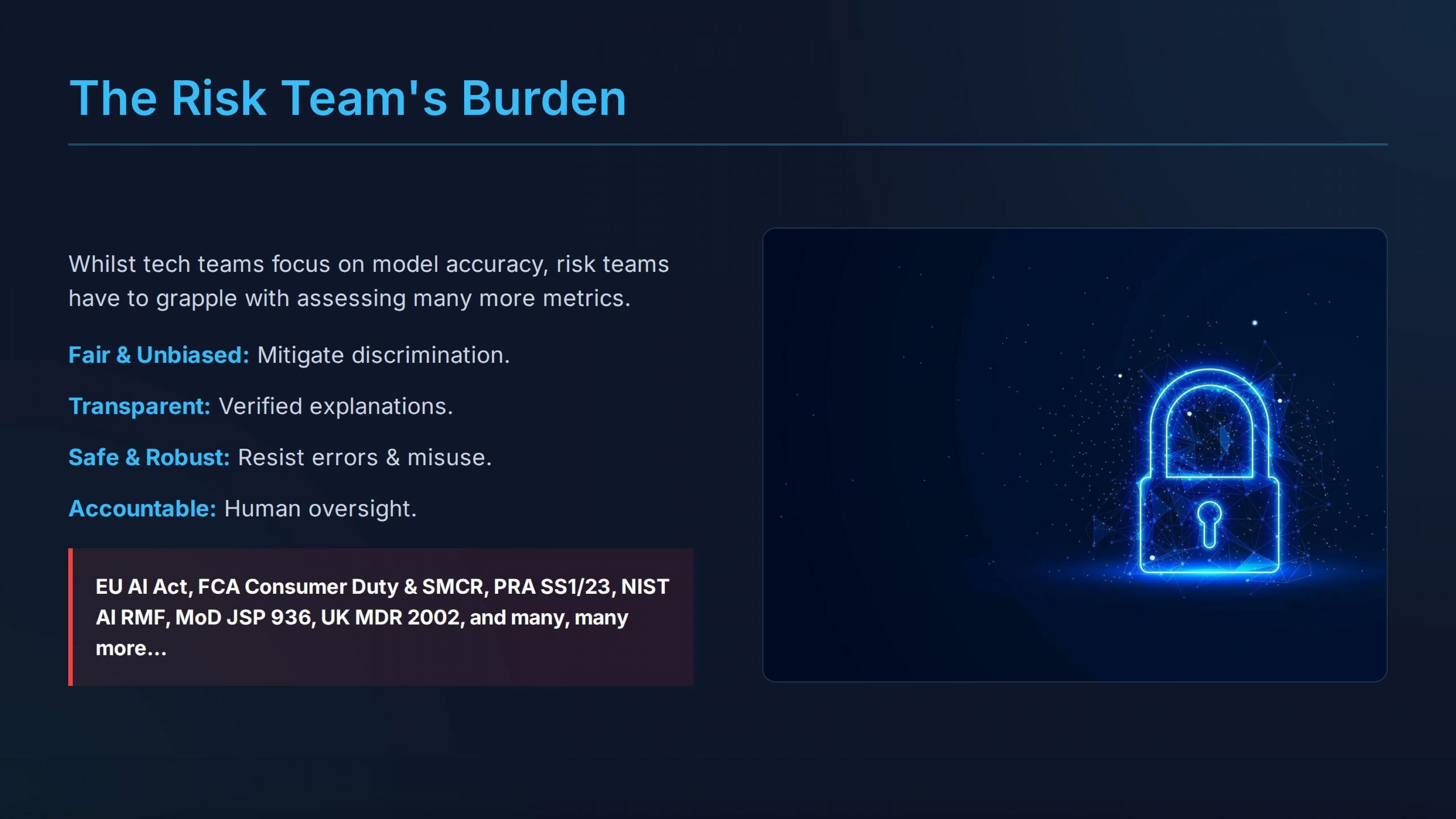

Lawmakers, users, and society have high expectations of AI system owners, particularly in regulated sectors. Their demands are reshaping corporate policies across security, risk, data, IT, procurement, and insurance.

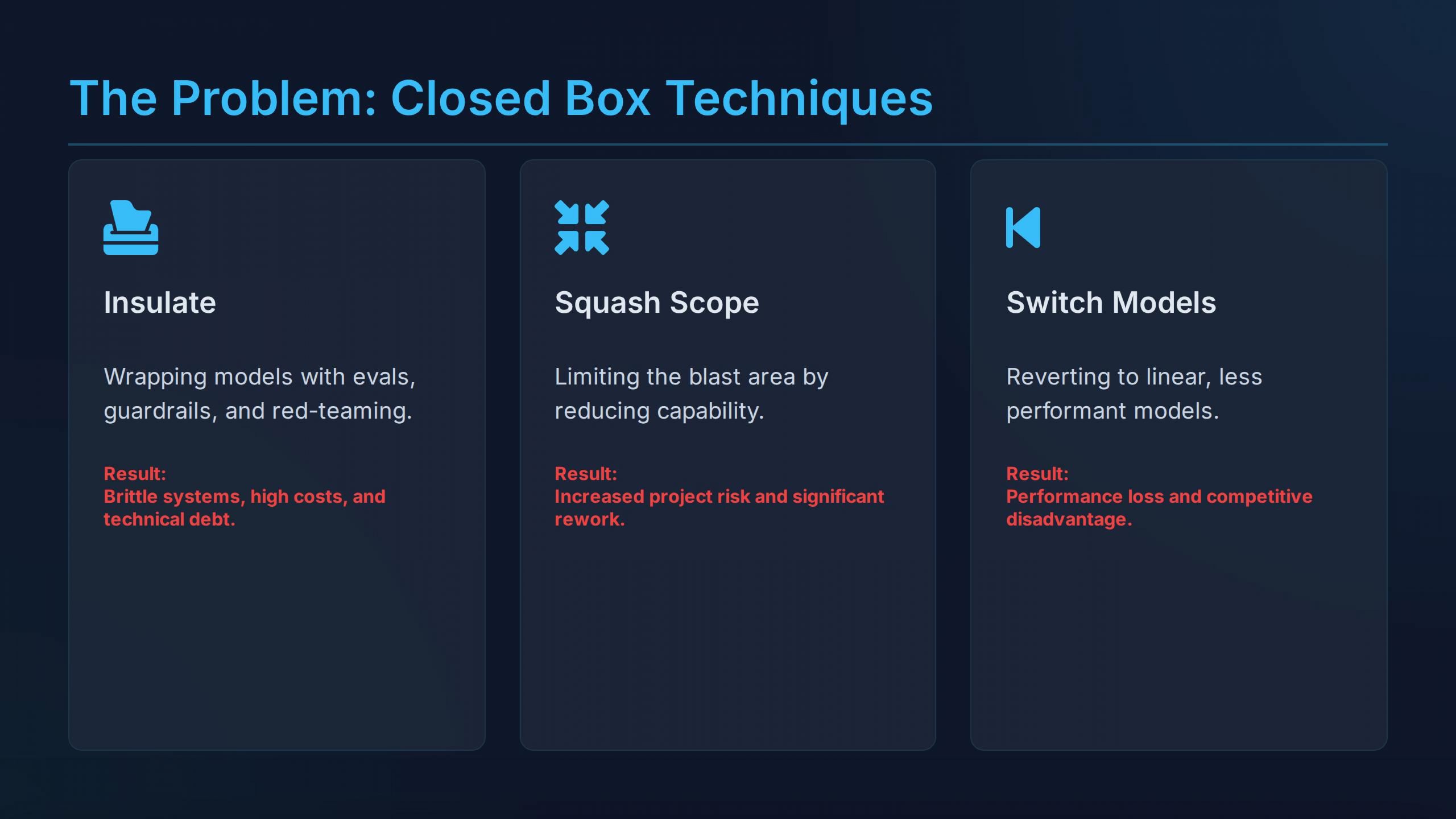

Whilst consensus is building around AI Governance (the processes to follow), Technical AI Assurance (tests implemented at the model level) has not kept pace.

EU AI Act, NIST AI RMF, GDPR Art. 22, sectoral regulations (FCA, MHRA), ISO/IEC 42001.

Head of Risk, GRC team, Chief Data Officer, CTO, AI/ML, data, and tech teams, product managers, external AI governance professionals, certification bodies, internal and external auditors, regulators.

Impact evaluation, Bias audit, Compliance audit, Certification, Conformity assessment, Performance testing, Formal verification.

Planning, Data preparation, Model development, System development, Evaluations, Validation and verification, Operation, monitoring and reporting.

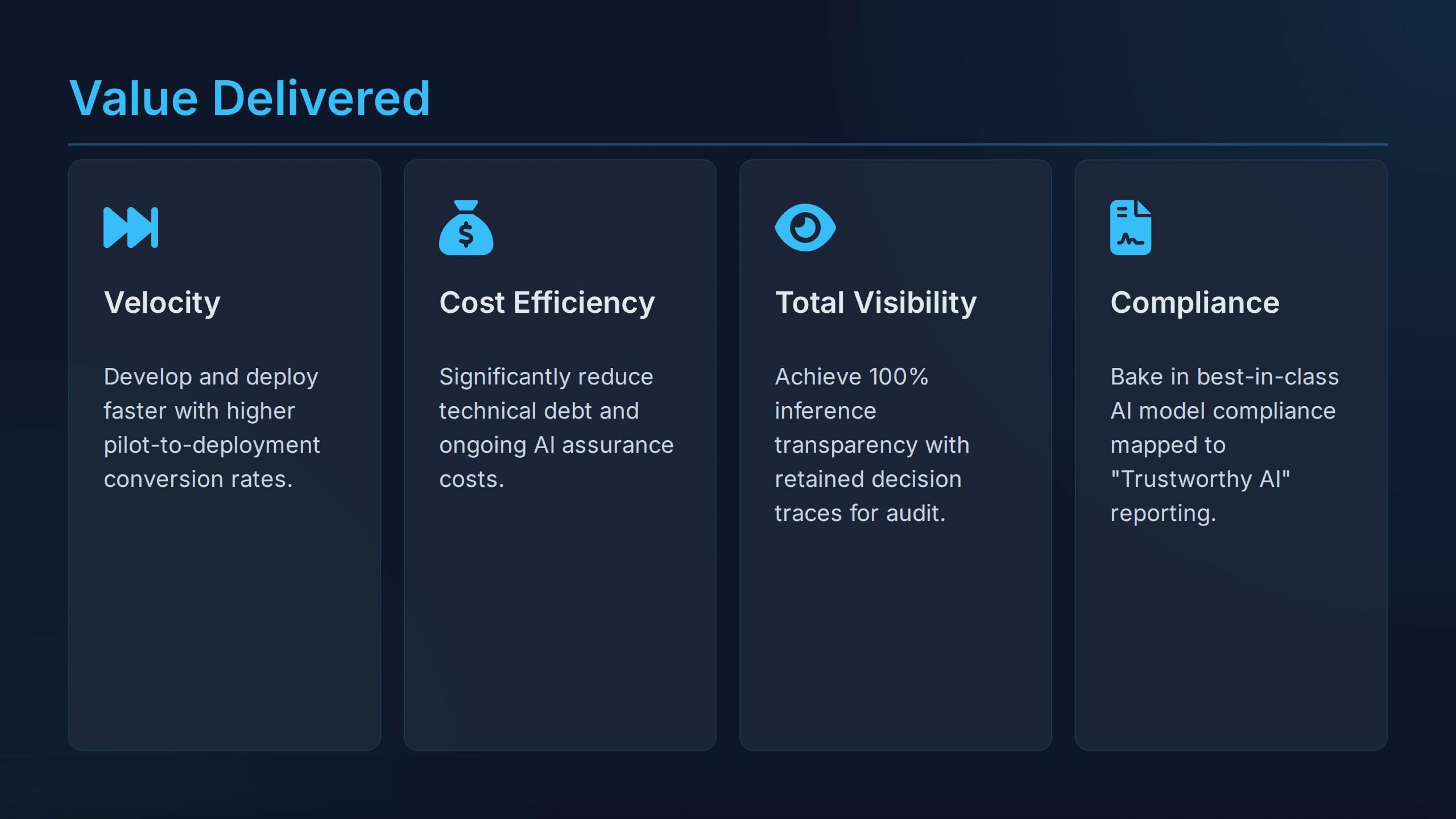

Solved by adding TIKOS™ to your pipelines

Use throughout the AI system lifecycle

TIKOS™ Evaluate

TIKOS™ Explain

TIKOS™ Explore

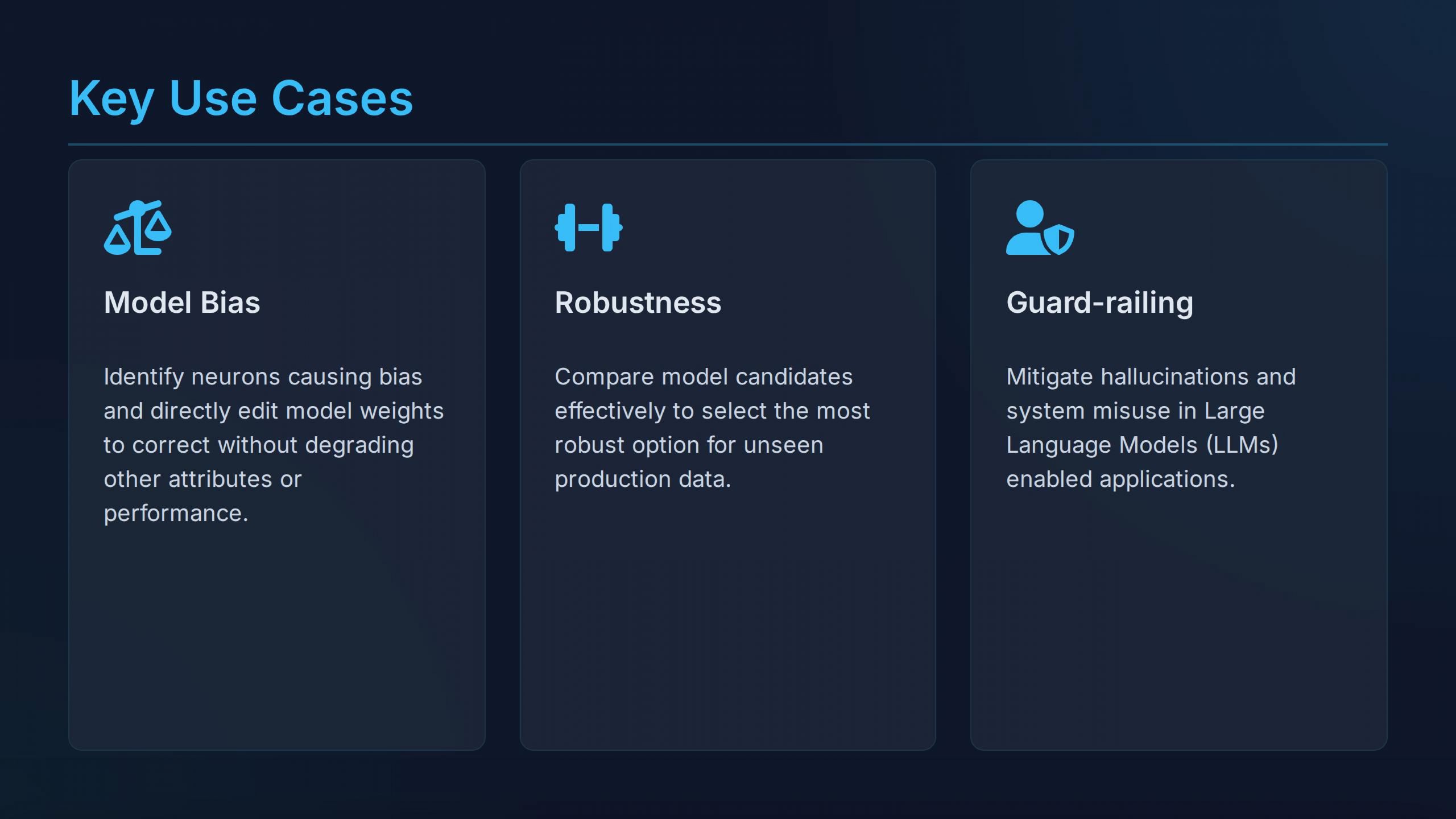

TIKOS™ addresses the core challenges for trustworthy AI development, deployment, procurement, and monitoring

Fair & Unbiased

Transparent & Explainable

Accurate, Safe & Robust

Accountable

Features

Regulations-first approach

TIKOS™ is engineered to deliver AI Assurance in regulated, high-stakes environments, across all major regulations and standards frameworks.

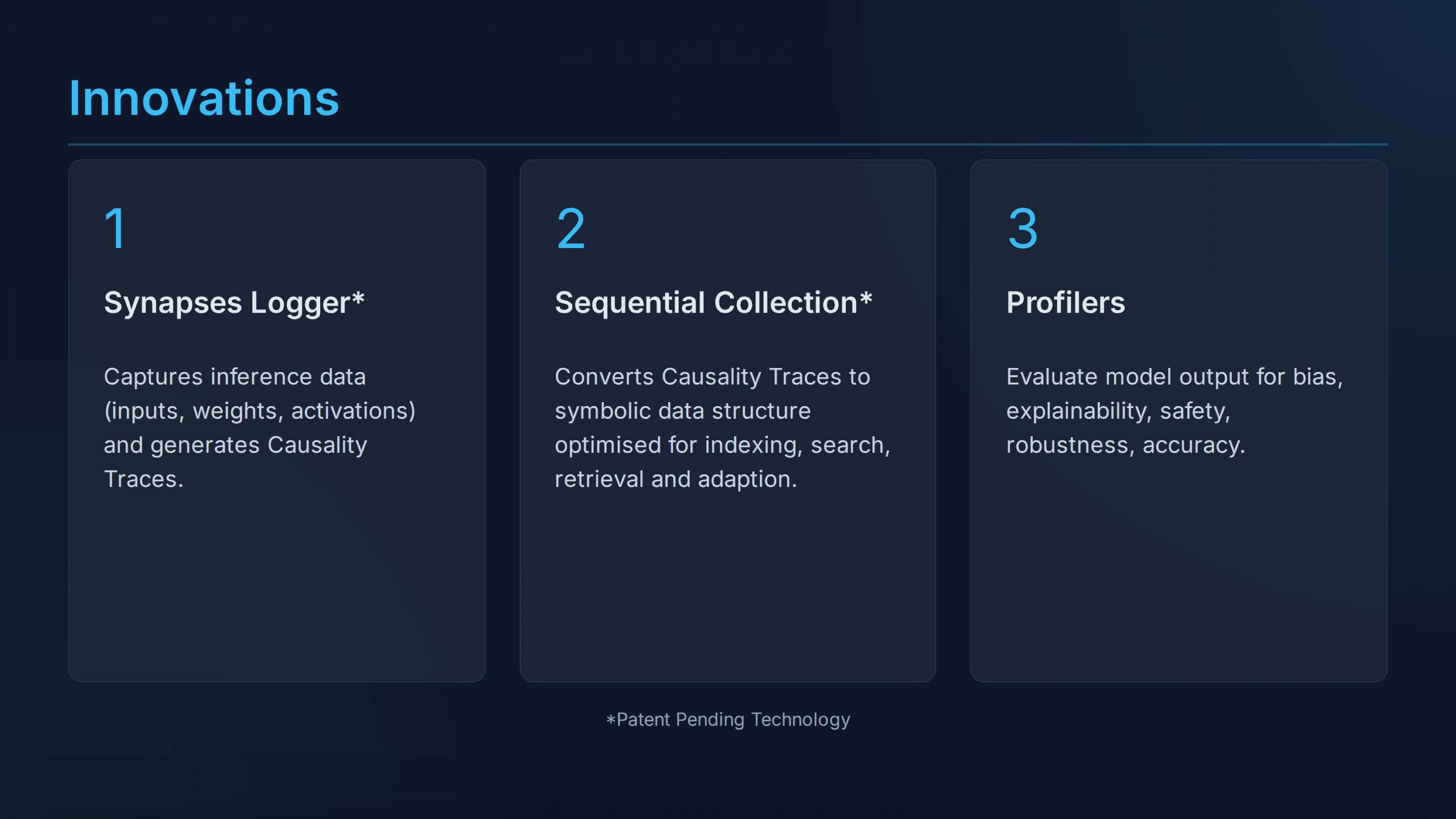

Proprietary technology

TIKOS™ is built from the ground up on original (PhD) research in AI transparency, reasoning and explainability.

Open architecture

TIKOS™ is agnostic to model class, developer framework, tooling and deployment infrastructure.

Expert-in-the-loop

TIKOS™ leverages organizational know-how through an ‘expert-in-the-loop’ system design.

Flexible deployment

TIKOS™ can be deployed as SaaS via APIs, an SDK, or platform access, including in a private cloud.